One reason A/B testing is so important is that it gives you more control over the experience you create for your users. Instead of cycling through different versions of your website until you stumble upon one that works, you can make changes based on measurable results.

However, A/B testing is not always completely accurate, and its results can be difficult to interpret and turn into solutions. Yet, despite its shortcomings, A/B testing should be your bread and butter when it comes to figuring out what works best for your site and your audience. To help you avoid the most common pitfalls, here are nine testing mistakes that almost everyone makes.

1. Stopping the Test Too Early

Let's say you have been testing two different versions of your website for four days, and the first version (A) is doing significantly better than the second (B). What do you do? In most cases, you will be tempted to end the test, because the results point to the best solution, right? Wrong! So how do you know when to end the test?

If you are doing the testing yourself, don't stop until one of the versions you are testing reaches at least 95% confidence. SumoMe tested three popup variations on their website and found that the third popup had a 99.95% chance of being the winner. The second one stopped at 82.97%, which might seem like a good number, but you should always aim for at least 95% confidence: at that level, there is only about a 5% chance of seeing a difference this large when there is no real difference between the versions.
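Testing tools differ in how they compute a figure like "chance of being a winner" (some use Bayesian methods). As a rough stand-in, the sketch below computes a one-sided confidence that variation B beats A with a classic pooled two-proportion z-test, using only the Python standard library. The function name and the visitor and conversion counts are hypothetical, not SumoMe's data.

```python
import math


def normal_cdf(z: float) -> float:
    """Standard normal CDF, computed from the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def confidence_b_beats_a(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """One-sided confidence that B's true conversion rate exceeds A's,
    based on a pooled two-proportion z-test (hypothetical helper)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.5  # no variation in the data, so no evidence either way
    z = (p_b - p_a) / se
    return normal_cdf(z)


# Hypothetical counts: 200/5000 conversions for A, 250/5000 for B.
conf = confidence_b_beats_a(conv_a=200, n_a=5000, conv_b=250, n_b=5000)
print(f"confidence that B beats A: {conf:.2%}")
print("reached 95%" if conf >= 0.95 else "keep testing")
```

Checking this number every day and stopping the first time it crosses 95% inflates the false-positive rate, so it is safer to decide on a sample size up front and only then read the result.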

2. Testing without a Proper Hypothesis

You shouldn't run A/B tests just because your competitors are doing it, or simply try out ideas until you come across one that works. Before you start testing, you should have a hypothesis: a clear idea of which change will improve which result, where the only thing missing is actual proof. Without a hypothesis, you learn very little about your audience's behavior, which makes the test pointless.

So how do you come up with a hypothesis? There are plenty of sources that can help. For example, you can look at data from Google Analytics or other analytics software, or at heatmaps, which show which parts of your website readers click on most. Surveys, polls, and interviews with your audience are also valuable sources of information.

3. Not Taking into Account Failed Tests

A failed test is not a reason to give up. A great example of this is a case study explained in detail by CXL, which shows how much work it can take to achieve great conversion rates. CXL completed as many as six rounds of tests. After the first round, their new design performed only 13% better than the original. In round two, the original beat the new version by 21%. But they kept on testing, and in the end they settled on a variation that performed almost 80% better than the design they had been using, which shows the importance of testing until you find the right variation.

4. Tests Which Involve Overlapping Traffic

At some point, it has probably occurred to you to run multiple A/B tests simultaneously. It's a great way to save time and money, but the trouble with that approach is that it can produce severely misleading results, because traffic overlaps between the pages you are testing. If you do run tests in parallel, make sure traffic is distributed evenly between the versions. If possible, though, avoid overlap altogether and run your tests one by one to get the most accurate results.
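One common way to keep parallel tests from overlapping, sketched below under stated assumptions, is to hash each visitor ID so that every visitor lands in exactly one experiment and then in one variant of it, with traffic split evenly. The experiment names, the salt strings, and the `bucket` and `assign` helpers are hypothetical; real testing platforms implement their own assignment logic.

```python
import hashlib


def bucket(user_id: str, salt: str, buckets: int) -> int:
    """Map a user to a stable bucket in [0, buckets) by hashing salt + user_id."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % buckets


# Hypothetical experiments that must not share visitors.
EXPERIMENTS = ["homepage_headline", "pricing_page_cta"]


def assign(user_id: str) -> tuple[str, str]:
    """Return (experiment, variant): each user joins exactly one experiment."""
    experiment = EXPERIMENTS[bucket(user_id, "experiment-layer", len(EXPERIMENTS))]
    # Salting with the experiment name gives a separate hash for the A/B split,
    # so it does not simply mirror the experiment choice.
    variant = "A" if bucket(user_id, experiment, 2) == 0 else "B"
    return experiment, variant


counts: dict[tuple[str, str], int] = {}
for i in range(10_000):
    key = assign(f"user-{i}")
    counts[key] = counts.get(key, 0) + 1
print(counts)
```

Because the assignment is derived from a hash rather than stored state, a returning visitor always sees the same variant, and the simulated visitors spread roughly evenly across the four experiment/variant cells.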

5. Not Testing All the Time

Remember that you are running tests for a reason, whether it's to improve conversion rates, learn about user behaviour, or boost your income. The online world is continuously evolving, so testing has to be an ongoing process: you need to keep up if you're going to keep your audience and customers.

6. Testing Without Significant Traffic or Conversions

Aside from having a hypothesis, you also need a sample that is large enough, in terms of both traffic and conversions, for the results to reach statistical significance. Otherwise, even if version A is a lot better than version B, the difference may not be apparent when the test is done. You will keep on testing without getting conclusive results, spending time and money that could have been put to better use elsewhere.

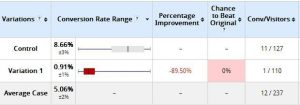

Let’s take a look at a study done by CXL. First, they tested the control and the variation against each other. After only two days of testing, the software they were using reported that the variation had no chance whatsoever of beating the control, as can be seen in the screenshot below:

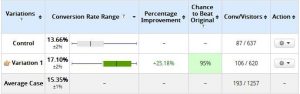

Yet, they didn’t call it quits, since the sample size was only around 100 visits per version. They kept the test running, and ten days later, the software produced the following results:

The variation, which had a 0% chance of winning after two days, was now winning by a landslide and had reached the coveted 95% confidence level. By then, however, the sample size was significantly larger (between 350 and 400 conversions per variation). This goes to show how important sample size is when running your tests. The number won't always be between 350 and 400, though. To figure out how big a sample you really need, use a sample size calculator, which should point you in the right direction.
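Sample size calculators generally rely on a power calculation for comparing two conversion rates. The sketch below uses the common normal-approximation formula with the Python standard library's `statistics.NormalDist`; the helper name, the 3% baseline rate, the 20% relative lift, the two-sided 5% significance level, and the 80% power are all hypothetical inputs, not figures from the CXL study.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline: float, relative_lift: float,
                              alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed per variation to detect `relative_lift` over `baseline`
    with a two-sided test at level `alpha` and the given power."""
    if relative_lift == 0:
        raise ValueError("relative_lift must be non-zero")
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)            # about 0.84 for 80% power
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


n = sample_size_per_variation(baseline=0.03, relative_lift=0.20)
print(f"visitors per variation: {n}")
print(f"expected conversions per variation (control): {n * 0.03:.0f}")
```

The result is a number of visitors per variation, not conversions; multiplying it by the baseline rate gives the expected number of conversions. Smaller expected lifts or lower baseline rates push the required sample up quickly.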

7. Testing while Changing Parameters

Once you have set up your test, with all its parameters, settings, variations, controls, and goals, you should let it run without changing any of them, even if you get a brilliant new idea in the process. For example, if you were to change the traffic split between two pages mid-test, the users already in the test would not be affected, but new users would be allocated differently, causing the test to show significantly different, and inaccurate, results.
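A small worked example, with entirely hypothetical numbers, shows how a mid-test change to the traffic split can produce a misleading result. Here A and B convert at exactly the same rate within each period, but the baseline rate drops after the split is changed from 50/50 to 20/80, so pooling the data makes A look better than B. The `pooled_rates` helper is illustrative only.

```python
# Hypothetical data: within each period, A and B convert at the SAME rate.
PERIODS = [
    # (visitors, share sent to A, true conversion rate for both variants)
    (2000, 0.5, 0.10),  # before the split change: 50/50
    (2000, 0.2, 0.05),  # after the change: 20/80, and the baseline rate drops
]


def pooled_rates(periods):
    """Pool visitors and expected conversions per variant across all periods."""
    visitors = {"A": 0, "B": 0}
    conversions = {"A": 0.0, "B": 0.0}
    for total, share_a, rate in periods:
        n_a = round(total * share_a)
        n_b = total - n_a
        visitors["A"] += n_a
        visitors["B"] += n_b
        conversions["A"] += n_a * rate  # same rate for both variants
        conversions["B"] += n_b * rate
    return {v: conversions[v] / visitors[v] for v in visitors}


rates = pooled_rates(PERIODS)
print({v: f"{r:.2%}" for v, r in rates.items()})  # {'A': '8.57%', 'B': '6.92%'}
```

The gap comes entirely from B receiving most of its traffic during the low-converting period, not from any difference between the pages.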

8. Including Too Many Variations in the Test

In theory, having more variations in your test might seem to give you more chances of finding a winner. In practice, too many variations will slow your test down, because it will take ages before you start to spot any real results, and you will also end up with false positives: results that look like winners but have no real statistical significance.

Let’s take Google’s 41 shades of blue test as an example. Google tested 41 different shades of blue to figure out which one would get the most clicks. The trouble was that at 95% confidence, the likelihood of at least one false positive was close to 90%, which is a clear example of what happens when you have too many variations. Had they included only 2 shades, the chance of a false positive would have been only 5%, which is about the best you can hope for at that confidence level.
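The arithmetic behind this is the family-wise error rate: with k independent comparisons, each run at significance level alpha, the chance of at least one false positive is 1 - (1 - alpha)^k. The sketch below assumes the 41 shades were each compared against one control (40 comparisons) and that the comparisons are independent, which is a simplification. It also shows a Bonferroni correction, one standard (if conservative) way to keep the overall error rate at or below 5%.

```python
def family_wise_error(num_comparisons: int, alpha: float = 0.05) -> float:
    """Chance of at least one false positive across independent comparisons."""
    return 1 - (1 - alpha) ** num_comparisons


def bonferroni_alpha(num_comparisons: int, alpha: float = 0.05) -> float:
    """Per-comparison significance level that keeps the overall rate at or below alpha."""
    return alpha / num_comparisons


print(f"2 shades (1 comparison):    {family_wise_error(1):.1%}")   # 5.0%
print(f"41 shades (40 comparisons): {family_wise_error(40):.1%}")  # 87.1%

strict = bonferroni_alpha(40)
print(f"Bonferroni per-test alpha: {strict:.5f} "
      f"(overall: {family_wise_error(40, strict):.1%})")
```

The catch is that a stricter per-test threshold needs an even larger sample to reach, which is another reason to keep the number of variations small.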

9. Not Taking into Account Validity Threats

Here is where it gets tricky. You have the results, and all that's left to do is pick the best variation, since you have spent enough time testing with a statistically significant sample. Well, not exactly. You have to take into account every metric that is relevant to your results, and if some of the metrics you are tracking are not producing results, you have to find out why.
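The article doesn't list specific validity threats, but one that can be checked mechanically is a sample ratio mismatch: the visitor counts per variation drift away from the split you configured, which usually signals a problem in assignment or tracking rather than a real effect. The sketch below runs a chi-square goodness-of-fit test for a two-way split (for one degree of freedom, the p-value equals `erfc(sqrt(chi2 / 2))`); the `srm_p_value` helper, the counts, and the 0.001 alert threshold are hypothetical.

```python
import math


def srm_p_value(visitors_a: int, visitors_b: int, expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value for an observed two-way traffic split."""
    total = visitors_a + visitors_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((visitors_a - expected_a) ** 2 / expected_a
            + (visitors_b - expected_b) ** 2 / expected_b)
    # For 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


ALERT_THRESHOLD = 0.001  # hypothetical cut-off

for a, b in [(5000, 4950), (5000, 4600)]:
    p = srm_p_value(a, b)
    status = "possible sample ratio mismatch" if p < ALERT_THRESHOLD else "split looks fine"
    print(f"A={a}, B={b}: p={p:.4g} -> {status}")
```

If a check like this fires, it is usually safer to find and fix the cause and rerun the test than to trust the winner it produced.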

Conclusion

Testing is essential if you want your website to remain as effective as it is now. But you need to think long and hard before each test and decide on all the parameters and variations. There are tools out there that can help you with that, but that doesn't mean they are 100% accurate. The best starting point is to avoid the mistakes described in this article, because that's how you increase the accuracy of your tests.
